Statistics & Optimization for Trustworthy AI

Our Research

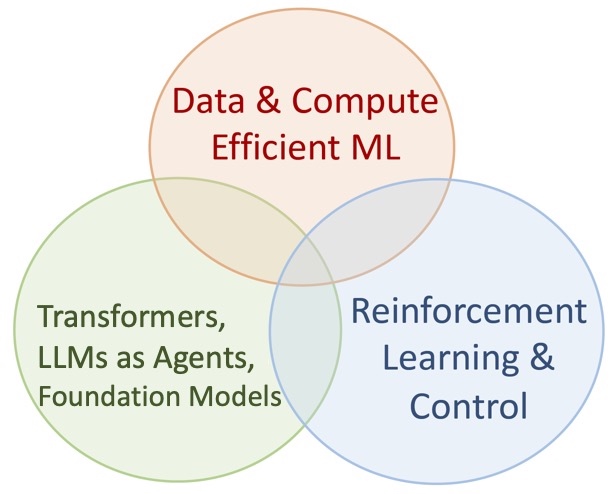

We develop principled and empirically impactful AI/ML methods:

- mathematical foundations for transformers, sequence modeling, and capabilities of language models

- core optimization and statistical learning theory

- language model reasoning and reinforcement learning

- trustworthy language and time-series (foundation) models

Recent news

- I will serve as a Senior Area Chair for NeurIPS 2025

- New preprints:

- Recent papers:

  - Everything Everywhere All at Once, ICML 2025 spotlight

  - Test-Time Training Provably Improves Transformers as In-context Learners, ICML 2025

  - High-dimensional Analysis of Knowledge Distillation, ICLR 2025 spotlight

  - Provable Benefits of Task-Specific Prompts for In-context Learning, AISTATS 2025

  - AdMiT: Adaptive Multi-Source Tuning in Dynamic Environments, CVPR 2025

- New award from Amazon Research on Foundation Model Development

- 2 papers will appear at AAAI 2025

- We are presenting 4 papers at NeurIPS 2024

- Congrats to Mingchen on his graduation and joining Meta as a Research Scientist!

- Congrats to our 2023 interns who will pursue their PhD studies at UC Berkeley, Harvard, and UIUC!

- Two papers at ICML 2024: Self-Attention <=> Markov Models and Can Mamba Learn How to Learn?

- New course on Foundations of Large Language Models: syllabus (including Piazza and logistics)

- New awards from NSF and ONR: We kickstarted two exciting projects to advance the theoretical and algorithmic foundations of LLMs, transformers, and their compositional learning capabilities.

- Two papers at AISTATS 2024

  - “Mechanics of Next Token Prediction with Self-Attention”, Y. Li, Y. Huang, M.E. Ildiz, A.S. Rawat, S.O.

  - “Inverse Scaling and Emergence in Multitask Representations”, M.E. Ildiz, Z. Zhao, S.O.

- Two papers at AAAI 2024 and one paper at WACV 2024

- Invited talks at USC, INFORMS, Yale, Google NYC, and Harvard on our work on transformer theory

- Two papers at NeurIPS 2023

- Grateful for the Adobe Data Science Research award!

- Our new works develop the optimization foundations of Transformers via a connection to SVMs

- Two papers at ICML 2023: Transformers as Algorithms and On the Role of Attention in Prompt-tuning

- Two papers at AAAI 2023: Provable Pathways and Long Horizon Bandits

We are grateful for our research sponsors
